Details of how it works (on the whiteboard)

Self-Attention

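In self-attention:

- Each word produces a query and receives keys and values from the words in the sequence.

- Dot product: the query is compared with each key through a dot product, which gives a similarity score for every pair of words.

- The values are then combined in a weighted sum, using the normalized scores as weights, producing a representation that takes all relevant words into account.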

Self-attention mechanism. Source: Jeremy Jordan.
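The steps listed above (query-key dot products, normalization, weighted sum of values) correspond to what is commonly written as scaled dot-product attention:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V$$

The NumPy sketch below is one minimal way to express it. The division by $\sqrt{d_k}$ and the softmax normalization follow the standard formulation and are assumptions here, since the notes only mention the dot product and the weighted sum. The function names `softmax` and `self_attention`, the toy sizes, and the random inputs are illustrative.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum before exponentiating, for numerical stability.
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(Q, K, V):
    """Scaled dot-product attention for one sequence.

    Q, K have shape (n_tokens, d_k); V has shape (n_tokens, d_v).
    """
    d_k = Q.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)      # dot product of each query with every key
    weights = softmax(scores, axis=-1)   # each row becomes a set of weights summing to 1
    output = weights @ V                 # weighted sum of the values
    return output, weights

# Toy input: 3 tokens, d_k = d_v = 4 (random values, for illustration only).
rng = np.random.default_rng(0)
Q = rng.normal(size=(3, 4))
K = rng.normal(size=(3, 4))
V = rng.normal(size=(3, 4))

output, weights = self_attention(Q, K, V)
print(output.shape)           # one output vector per token
print(weights.sum(axis=-1))   # attention weights per token sum to 1
```

Row `i` of `weights` tells how much token `i` draws from each token in the sequence, and row `i` of `output` is the resulting mix of values.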

Self-Attention

| Element | Intuition | How does it show up in practice? |
|---|---|---|
| Queries (Q) | Question: each word in the sentence is “asking a question” about which other words it wants information from. | Vector representing the word itself, obtained by multiplying its embedding by the query weight matrix (commonly written $W_Q$). |
| Keys (K) | Locker keys: the other words hold “keys” that can be compared against the questions. If a key is similar to the question, it “opens” the door to the relevant information. | Vector produced from the same word, but using the key weight matrix (commonly written $W_K$). |
| Values (V) | Content stored behind the doors: when a door opens, what is inside is the information that word passes on to the question. | Vector obtained by multiplying the embedding by the value weight matrix (commonly written $W_V$). |
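The "in practice" column of the table can be sketched as three matrix multiplications applied to the same embeddings. In this minimal NumPy example the names `X`, `W_Q`, `W_K`, `W_V` and the sizes are assumptions chosen for illustration, and the weight matrices are random stand-ins for parameters that a real model would learn during training.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical sizes: 4 tokens, embedding size 8, projection size 3.
n_tokens, d_model, d_k = 4, 8, 3

# One embedding vector per token (one row per word of the sentence).
X = rng.normal(size=(n_tokens, d_model))

# Random stand-ins for the learned projection matrices.
W_Q = rng.normal(size=(d_model, d_k))
W_K = rng.normal(size=(d_model, d_k))
W_V = rng.normal(size=(d_model, d_k))

# Every token gets its own query, key, and value from the same embedding.
Q = X @ W_Q   # the "questions"
K = X @ W_K   # the "keys"
V = X @ W_V   # the "contents"

print(Q.shape, K.shape, V.shape)  # (4, 3) (4, 3) (4, 3)
```

The same embedding feeds all three projections; only the weight matrix changes, which is what lets one word play the roles of question, key, and content at once.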

YouTube analogy

- Query (Q): The video you are watching right now acts as the "question": it asks which other videos might be relevant.

- Key (K): Each video in your recommendation list has a “title + description” that acts as a key; the algorithm compares this key with the question to measure how similar they are.

- Value (V): When the key matches, the value is the video itself (or its link). It holds everything you actually receive: title, thumbnail, description, etc.

Summary and Next Steps

- Goal: Let each token access and combine information from all positions in the sequence simultaneously.

- Queries (Q): “Question” vectors generated from the token itself.

- Keys (K): “Key” vectors that represent the content of each token in the same sequence.

- Values (V): Vectors holding the actual information that will be combined.

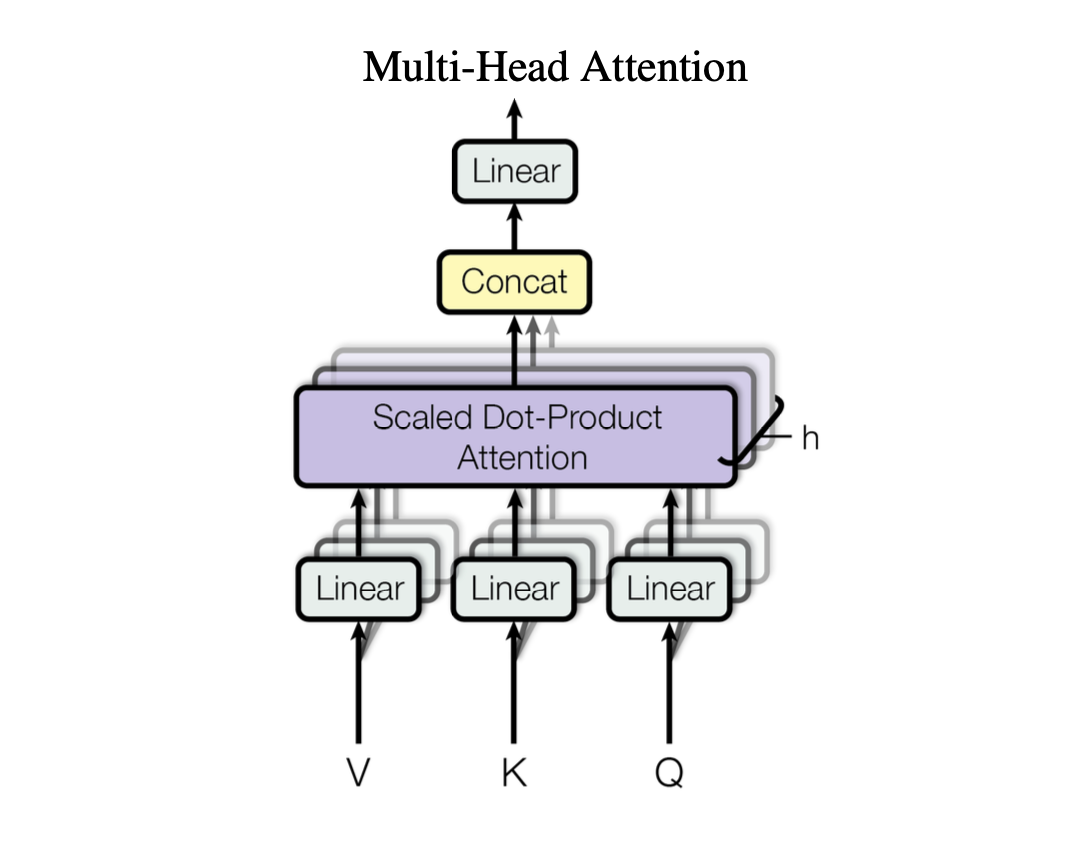

- Next Steps: Understand Multi-Head Attention.

Multi-Head Attention. Source: Jeremy Jordan.